Hello, I'm

Muhammad Umar

I am an Erasmus Mundus scholar and master's student in Intelligent Field Robotic Systems (IFRoS), a joint master's program taught at Universitat de Girona, Spain, and Eötvös Loránd University, Budapest. I am currently a master's thesis student at Eurecat Technology Center, Barcelona, working on ground robotic systems. My research topic is vision-based reactive navigation for agricultural robotics applications. I am passionate about space robotics, and my ultimate goal is to work with a space program and revolutionize the space industry through innovation that combines robotics and AI.

Projects

Package Delivery Robot using AgileX Scout Robot

Frontier-Based Exploration using Hybrid-A* Planner

EKF-SLAM using Corner Features

Object Recognition using PyTorch in ROS

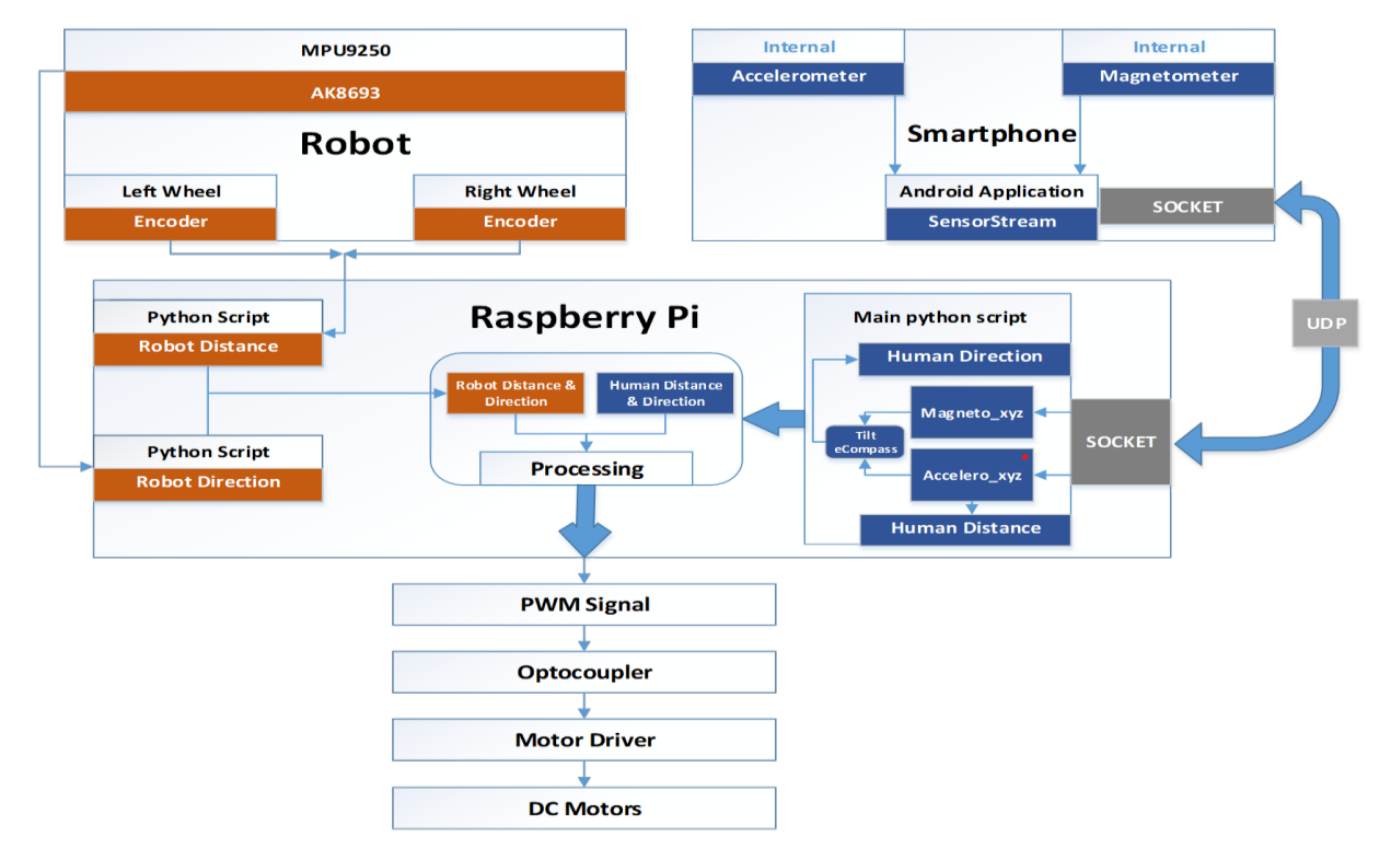

TRACK-E: Smartphone IMU-Based Human-Following Robot (Bachelor's Final Year Project)

Stereo Vision: Matching and Display

- Naive Stereo Matching

- Dynamic Programming

- OpenCV's StereoSGBM

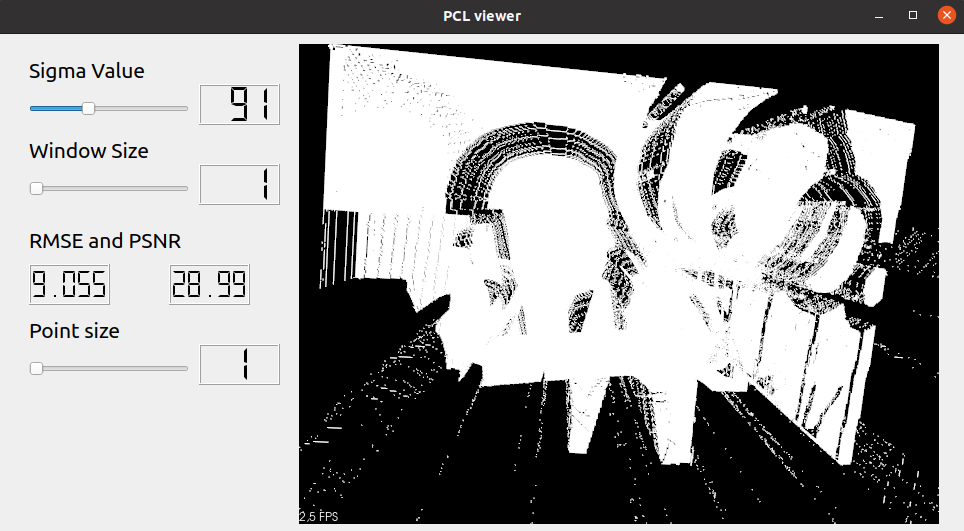

Image Filters using Point Cloud Library with QT5/VTK GUI

- Bilateral Filter

- Bilateral Median Filter

- Joint Bilateral and Joint Bilateral Median Filter

- Joint Bilateral Upsampling and Joint Bilateral Median Upsampling

- Iterative Upsampling

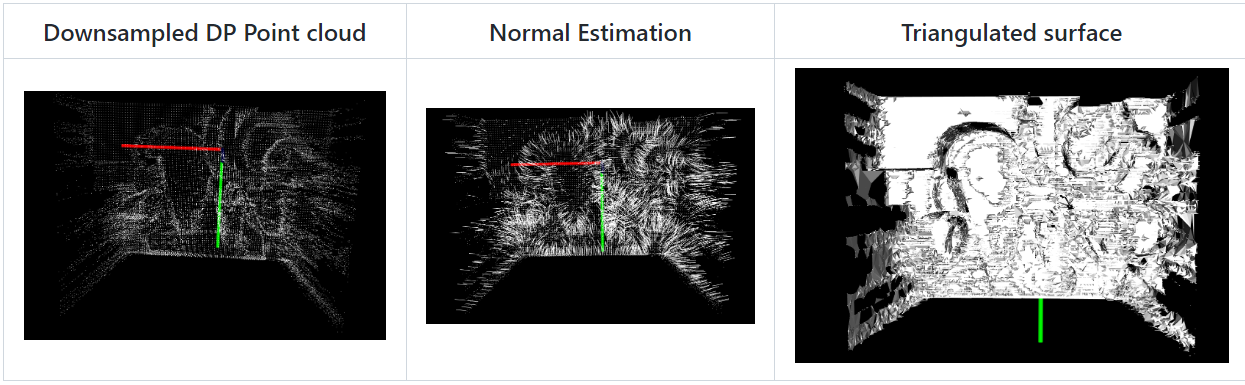

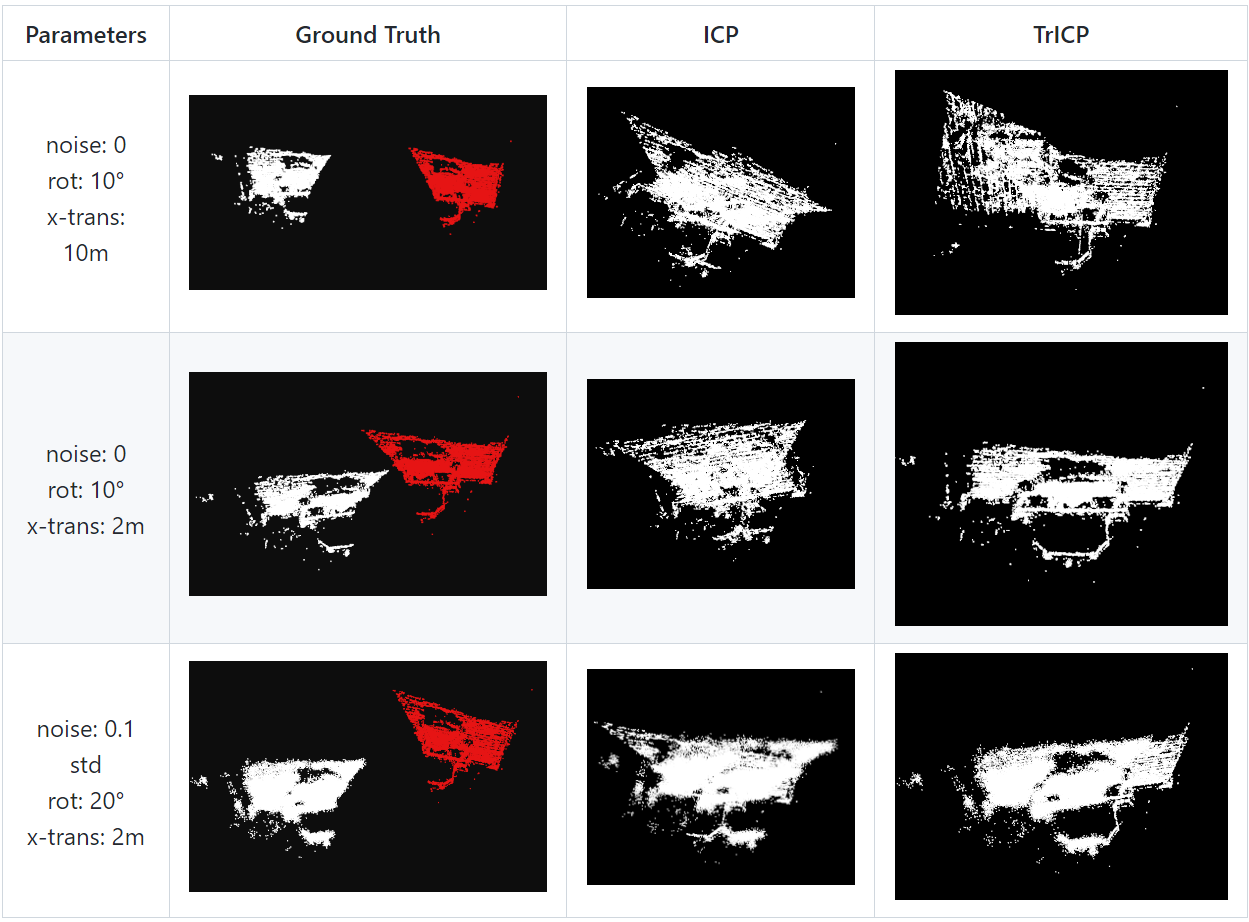

Point Cloud Reconstruction using ICP and TrICP

- Nearest Neighbour Search (kd-trees using nanoflann)

- Rotation Estimation (using SVD)

- Iterative Closest Point (ICP)

- Trimmed Iterative Closest Point (TrICP)

Deep Learning Projects

- Trained an image classifier on the Fruits-360 dataset with VGG16, using PyTorch and transfer learning.

- Fine-tuned Mask R-CNN on a custom sunflower dataset for segmentation.

- Implemented an encoder architecture and fine-tuned an encoder-decoder model on the COCO image captioning dataset.

Research Work